Why do you need FAIR data?


For many organizations, the idea of adopting FAIR can be confusing and daunting. Over the coming weeks, we’ll present a series of blogs to help demystify FAIR. In this series, we’ll cover topics including how ontologies provide the key to being FAIR, and how FAIR enables you to get more value from your data.

Why all the interest in FAIR?

Aligned with an ambition to become more data-driven, there is a pharmaceutical industry-wide interest in adopting the FAIR principles – so-called ‘FAIRification’ – to improve data quality, avoid repeating work, and enable better-informed decision-making. Well-structured, standardized data is also a prerequisite for the adoption of the latest cutting-edge AI technologies – the 2023 Global Data survey puts AI as the most impactful technology for pharma for the fourth year running.

Like all good initiatives, the FAIR principles are grounded in common sense – after all, what use is data that you can’t find, access, integrate, or that can’t be re-used? Yet for many companies, that is the reality for a large proportion of the data at their disposal.

Data generators often do not include sufficient metadata about their data for others to find, access, and reuse it. Poorly described data is also hard to interpret correctly because its original purpose is unclear, which presents a barrier to its use and limits the value that can be gained from it. In fact, a European Commission report estimates that inefficiencies and other issues related to not having FAIR research data cost the European economy at least €10.2 billion each year. When taking into account the resulting impact on research quality, economic turnover, and machine readability, that figure increases to €26 billion per year.

How often have you searched for something and had to iterate through repeated attempts to get to the right answer? In science, it’s pretty common that, for example, a search for novel research related to ‘muscarinic acetylcholine receptor M1’ will not find articles that refer to the commonly used synonym ‘cholinergic receptor muscarinic 1’. Manually searching a range of sources with all possible synonyms is both error-prone and resource-intensive. All that time adds up. In fact, the same European Commission report estimates that data that is not findable or understandable, or that has incomplete metadata, contributes 43.81% to the cost of not having FAIR data, equating to €4.4 billion wasted each year.
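To make the synonym problem concrete, here is a minimal Python sketch of a search that expands a query to every known name for the same concept before matching. The synonym table, the `EXAMPLE:0001` identifier, and the function names are illustrative assumptions rather than part of any real ontology or product; a real system would draw its synonyms from a curated ontology.

```python
# Toy synonym table. The identifier is a made-up placeholder,
# not a real ontology ID.
SYNONYMS = {
    "EXAMPLE:0001": [
        "muscarinic acetylcholine receptor M1",
        "cholinergic receptor muscarinic 1",
        "CHRM1",
    ],
}

# Reverse index from lower-cased name to concept identifier.
NAME_TO_ID = {
    name.lower(): concept_id
    for concept_id, names in SYNONYMS.items()
    for name in names
}


def expand_query(query):
    """Return every known name for the concept the query refers to."""
    concept_id = NAME_TO_ID.get(query.strip().lower())
    if concept_id is None:
        return [query]  # unknown term: search for it as typed
    return SYNONYMS[concept_id]


def search(query, documents):
    """Return documents that mention the query or any of its synonyms."""
    names = [name.lower() for name in expand_query(query)]
    return [doc for doc in documents if any(name in doc.lower() for name in names)]


documents = [
    "A novel agonist of cholinergic receptor muscarinic 1 improves memory.",
    "Muscarinic acetylcholine receptor M1 expression in the cortex.",
    "An unrelated article about kinase inhibitors.",
]

hits = search("muscarinic acetylcholine receptor M1", documents)
print(len(hits))  # 2: both receptor articles, regardless of which name they use
```

A plain string search for the original query would have returned only the second document. The expansion step is what recovers the first.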

The International Data Corporation (IDC) predicts that organizations that are able to analyze all relevant data and deliver actionable information will achieve significant productivity benefits over their less analytically-oriented peers. Essentially, organizations that don’t adopt FAIR principles are putting themselves at a disadvantage compared to their more ‘data savvy’ peers.

What are the implications for suppliers to the pharma industry?

The trend to outsource continues to gain pace within many pharmaceutical and biotech companies. However, while this often helps reduce costs and increase flexibility and agility from an organizational perspective, it also makes it harder to bring together internal and external data.

For example, if an external research organization (often called a Contract Research Organization or CRO) uses its own scheme or vocabulary for assay names, then it will be difficult to integrate the data it produces with internal assay data. To address this problem, pharmaceutical and biotech companies are increasingly looking to data producers, whether CROs, publishers, or other suppliers, to provide content in a FAIR way to simplify the process of harmonizing internal and external data.

So, for example, CROs that annotate their analytical results using public standards, such as the BioAssay Ontology (BAO), will have a competitive advantage. It seems likely that many pharma companies will ultimately make the provision of FAIR data a prerequisite for doing business.
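The harmonization problem described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the assay names, the mapping between them, and the `EX:` identifiers are all invented for the example and are not real BAO terms.

```python
# Hypothetical mapping from local assay names to a shared identifier.
# The "EX:" identifiers are placeholders, not real BAO terms.
ASSAY_VOCABULARY = {
    "cell viability assay": "EX:0000001",
    "cytotox screen": "EX:0000001",
    "binding assay": "EX:0000002",
    "radioligand binding": "EX:0000002",
}


def harmonize(records):
    """Attach a shared assay identifier to each record, or None if unmapped."""
    harmonized = []
    for record in records:
        key = record["assay"].strip().lower()
        harmonized.append({**record, "assay_id": ASSAY_VOCABULARY.get(key)})
    return harmonized


internal = [{"source": "internal", "assay": "Cell viability assay", "value": 0.82}]
external = [
    {"source": "cro", "assay": "Cytotox screen", "value": 0.79},
    {"source": "cro", "assay": "Proprietary assay X", "value": 0.10},
]

combined = harmonize(internal + external)

# Group values by shared identifier; unmapped records are set aside for curation.
by_assay = {}
unmapped = []
for record in combined:
    if record["assay_id"] is None:
        unmapped.append(record)
    else:
        by_assay.setdefault(record["assay_id"], []).append(record["value"])
```

Once both sources carry the same identifier, internal and external results fall into the same group without any manual matching of names. The record that uses a name outside the vocabulary is the one that still needs a curator.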

Learn more about FAIR principles in our roundtable discussion – Best practice, observations, and realization.

Starting your FAIR data journey

One of the cornerstones of the FAIR data principles is the use of ontologies to assign a unique identifier and description to scientific terms so that they can be understood as “things, not strings”, a process also known as semantic enrichment. So, for example, rather than being a random string of letters, the term ‘NIDDM’ can be unambiguously understood as referring to the indication ‘non-insulin-dependent diabetes.’

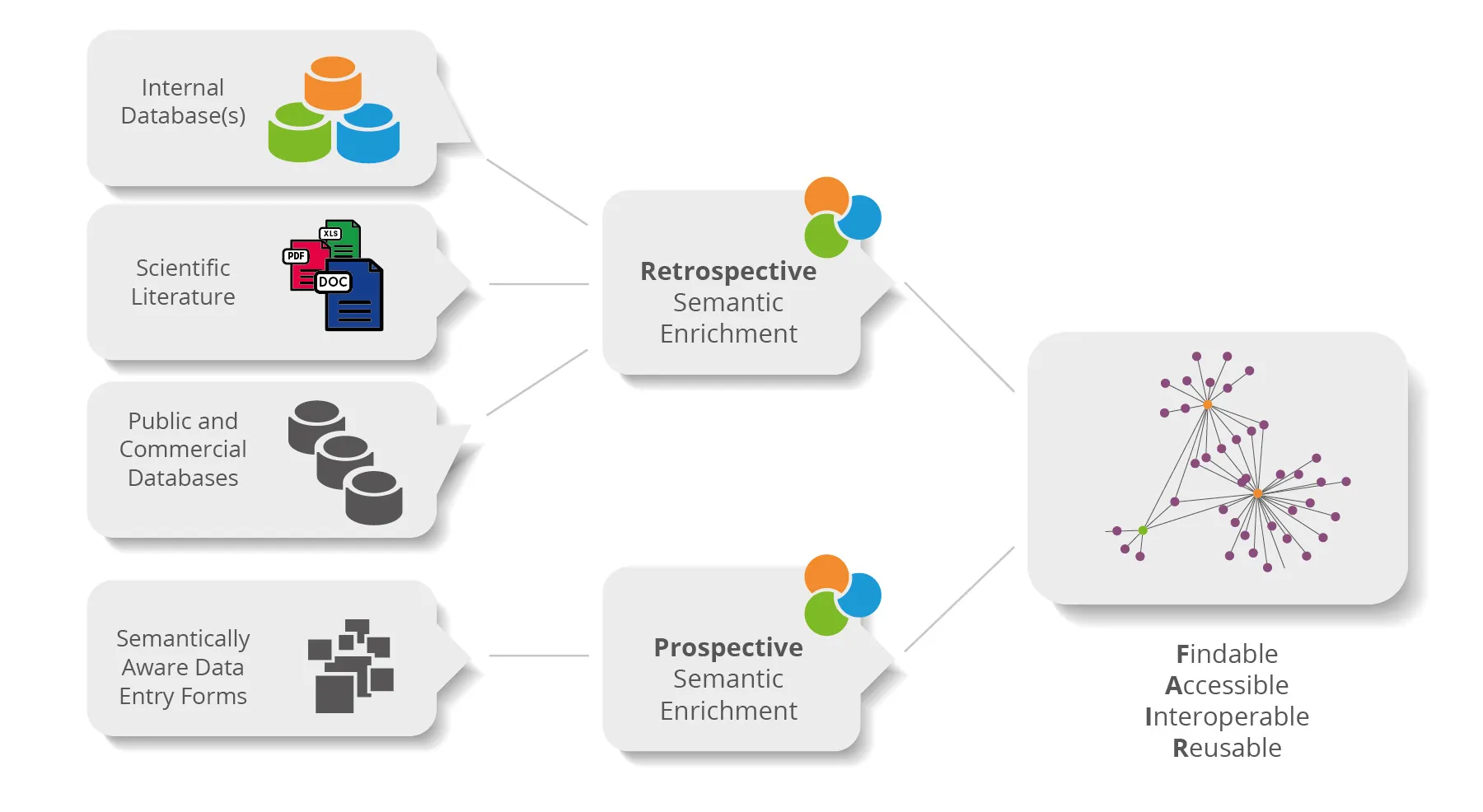

We’ll discuss the broader role of ontologies in the FAIRification of data in more detail in our next blog. But for now, let’s consider the two main types of data that benefit from application of the FAIR principles – newly created data and legacy data.

The goal for newly created data should be to apply semantic enrichment prospectively. By aligning fields in a data entry form with public standard ontologies, for example, by linking them to SciBite’s ontology management platform, CENtree, they become semantically aware, making it easy for authors to capture data that is ‘born FAIR’.
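As a rough illustration of what ‘born FAIR’ capture means in practice, the Python sketch below resolves whatever a user types into a form field to a term from a controlled vocabulary and rejects anything it cannot resolve. It does not use or represent the CENtree API; the vocabulary, the `EX:` identifiers, and the function names are assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical controlled vocabulary for an "indication" form field.
# Labels map to placeholder identifiers, not real ontology IDs.
INDICATION_TERMS = {
    "non-insulin-dependent diabetes": "EX:1000",
    "asthma": "EX:1001",
}
INDICATION_SYNONYMS = {"niddm": "non-insulin-dependent diabetes"}


@dataclass(frozen=True)
class IndicationEntry:
    term_id: str
    label: str


def capture_indication(user_input):
    """Resolve form input to a vocabulary term, rejecting unknown values."""
    key = user_input.strip().lower()
    label = INDICATION_SYNONYMS.get(key, key)
    if label not in INDICATION_TERMS:
        raise ValueError(f"'{user_input}' is not in the controlled vocabulary")
    return IndicationEntry(term_id=INDICATION_TERMS[label], label=label)


entry = capture_indication("NIDDM")
```

Here `entry` holds the identifier and preferred label rather than the raw string the author typed, so the stored value is already a ‘thing’ and never needs cleaning up later.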

But what about legacy data? It has been estimated that at least 80% of the business-relevant information in any organization is stored as unstructured text – the very definition of unFAIR data. However, all is not lost. SciBite’s Named Entity Recognition (NER) engine, TERMite, can be used to apply semantic enrichment retrospectively, transforming unstructured scientific text into structured data and giving each recognized term an explicit, specific meaning.
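To give a feel for what retrospective enrichment produces, here is a deliberately simple dictionary-based annotator in Python. It is not TERMite and makes no claim about how TERMite works; it is a plain regular-expression lookup over a toy dictionary whose `EX:` identifiers are placeholders.

```python
import re

# Toy dictionary; identifiers are placeholders, not real ontology IDs.
DICTIONARY = {
    "NIDDM": ("EX:1000", "non-insulin-dependent diabetes"),
    "non-insulin-dependent diabetes": ("EX:1000", "non-insulin-dependent diabetes"),
}

# Longest names first so multi-word names win over shorter overlaps.
_names = sorted(DICTIONARY, key=len, reverse=True)
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(name) for name in _names) + r")\b",
    re.IGNORECASE,
)
LOOKUP = {name.lower(): value for name, value in DICTIONARY.items()}


def annotate(text):
    """Return one annotation per dictionary match, with character offsets."""
    annotations = []
    for match in PATTERN.finditer(text):
        term_id, label = LOOKUP[match.group(1).lower()]
        annotations.append(
            {
                "start": match.start(),
                "end": match.end(),
                "text": match.group(1),
                "id": term_id,
                "label": label,
            }
        )
    return annotations


note = "Patient history of NIDDM; non-insulin-dependent diabetes diagnosed in 2010."
annotations = annotate(note)
```

Both mentions in the note resolve to the same identifier, even though one is an abbreviation and the other is written out in full. Production NER engines also have to handle ambiguity, spelling variants, and context, which this sketch ignores.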

Through this ‘FAIRification’ process, legacy data can be used in the same way as data that was born FAIR, opening up new possibilities to mine existing sources more effectively and derive valuable new insights from them.

Figure 1: Retrospective and prospective semantic enrichment of a range of disparate, unstructured, structured, and semi-structured data sources

Summary

The FAIR principles are not only common sense; they also need to be an integral part of any organization’s data strategy.

SciBite’s unique combination of retrospective and prospective semantic enrichment enables organizations to apply FAIR principles to their data and mitigate the significant costs of not having FAIR research data.

In the next blog in this series, we’ll explain how ontologies are the key to making both new and existing data FAIR. In the meantime, contact us at [email protected] to find out how we can help you on your FAIR data journey.

About Jane Lomax

Head of Ontologies, SciBite

Jane leads the development of SciBite’s vocabularies and ontology services. With a Ph.D. in Genetics from Cambridge University and 15 years of experience working with biomedical ontologies, including at the EBI and Sanger Institute, she has focused on bioinformatics and developing biomedical ontologies. She has published over 35 scientific papers, mainly in ontology development.

Other articles by Jane

1. [Webinar] Introduction to ontologies

2. [Blog] A day with the FAIRplus project: Implementing FAIR data principles

3. [Blog] Using ontologies to unlock the full potential of your scientific data – Part 1

4. [Blog] Using ontologies to unlock the full potential of your scientific data – Part 2

5. [Blog] How biomedical ontologies are unlocking the full potential of biomedical data

Related articles

Bringing FAIR data and CMC procedures together

In this blog we introduce our new package of vocabularies designed to enable the FAIR data principles and help pharmaceutical companies navigate their documents with respect to Chemistry, Manufacture and Control (CMC) procedures.

Large language models (LLMs) and search; it’s a FAIR game

Large language models (LLMs) have limitations when applied to search due to their inability to distinguish between fact and fiction, potential privacy concerns, and provenance issues. LLMs can, however, support search when used in conjunction with FAIR data and could even support the democratisation of data, if used correctly…

Copyright © 2024 Elsevier Ltd., its licensors, and contributors. All rights are reserved, including those for text and data mining, AI training, and similar technologies.